Hyperparameter Optimization with Factorized Multilayer Perceptrons

Experimental Evaluation for Hyperparameter Tuning Strategies


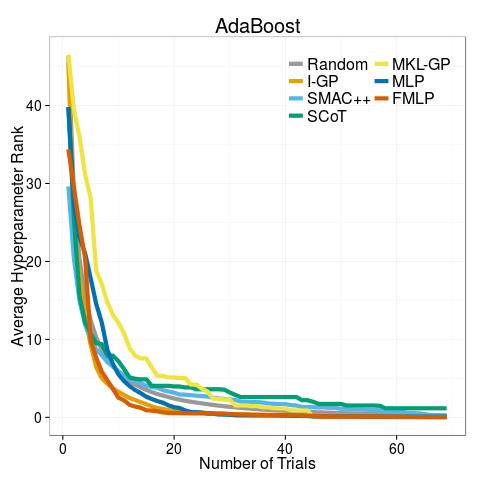

Average hyperparameter rank results on HyLAP_AdaBoost

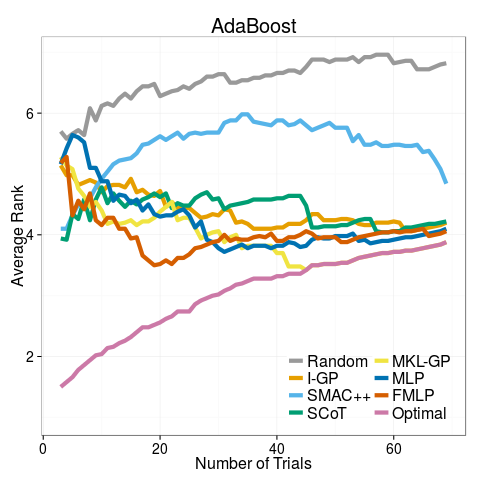

Average rank results on HyLAP_AdaBoost

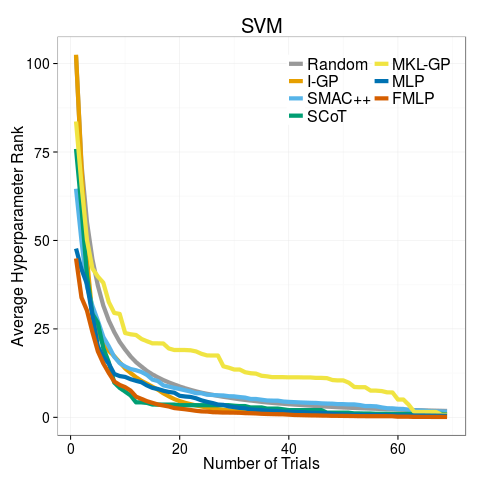

Average hyperparameter rank results on HyLAP_SVM

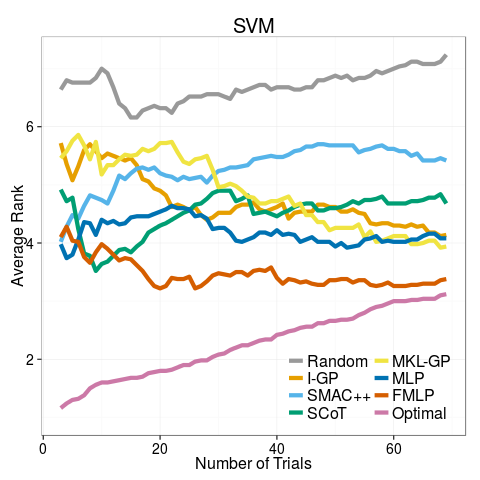

Average rank results on HyLAP_SVM
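The plots above report average ranks. This page does not spell out how they are computed, so the following is only a sketch of one common convention, assumed here: on each data set the tuning strategies are ranked by the best error found so far (lower is better, ties share the mean rank), and the ranks are then averaged over data sets. The function name and input layout are illustrative and not taken from the paper.

```python
def average_ranks(scores):
    """Average rank of each strategy over data sets.

    scores[d][s] is the best error found by strategy s on data set d
    (lower is better). Tied strategies share the mean of their ranks.
    """
    n_strategies = len(scores[0])
    totals = [0.0] * n_strategies
    for row in scores:
        for s, value in enumerate(row):
            better = sum(1 for other in row if other < value)
            equal = sum(1 for other in row if other == value)
            # Tied entries occupy ranks better+1 .. better+equal; use their mean.
            totals[s] += better + (equal + 1) / 2
    return [total / len(scores) for total in totals]
```

Calling this once per trial, with the best-so-far errors at that trial, would give one point per strategy on a rank-over-trials curve.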

Experiment Protocol with detailed hyperparameter grids

Description

This page provides the hyperparameter grids for the experiments shown in the paper.

Reconstruction of Response Surface

Random Forest

- α in { 0.0001, 0.0005, 0.001, 0.005, 0.01, 0.05, 0.1 }

- Number of Trees T in { 1, 2, 5, 10, 20, 50, 100, 200, 500, 1000 }

SVM Regression

- Tradeoff Parameter C in 2^x for x in { -5, -4, ..., 4 }

- Kernel Width γ in { 1, 0.5, 0.1, 0.05, 0.01, 0.005, 0.001, 0.0005, 0.0001 }
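As a minimal sketch, the SVM regression grid above can be enumerated in Python. It assumes the tradeoff grid is read as powers of two (C = 2^x); the variable names are illustrative and not taken from the paper's code.

```python
from itertools import product

# Assumption: the tradeoff parameter grid is C = 2**x for x = -5, ..., 4.
C_values = [2.0 ** x for x in range(-5, 5)]
gamma_values = [1, 0.5, 0.1, 0.05, 0.01, 0.005, 0.001, 0.0005, 0.0001]

# Every (C, gamma) pair that a full grid search would visit.
svm_grid = list(product(C_values, gamma_values))
```

Under this reading the grid contains 10 × 9 = 90 configurations.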

Factorization Machine

- Learn Rate η in { 0.01,0.001,0.0001 }

- Regularization Constant λ in { 0.1,0.01,0.001,0.0001 }

- Initial Standard Deviation σ in { 0.1,0.01,0.001,0.0001 }

- Number of Latent Features K in { 2,5,8 }

Multilayer Perceptron

- Learn Rate η in { 0.1,0.01,0.001 }

- Momentum λ in { 0.1,0.01,0.001 }

- Number of Layers L in { 2,5 }

- Number of Nodes per Layer N in { 2,5 }

Factorized Multilayer Perceptron

- Learn Rate η in { 0.1,0.01,0.001 }

- Momentum λ in { 0.1,0.01,0.001 }

- Number of Layers L in { 2,5 }

- Number of Nodes per Layer N in { 2,5 }

- Number of Latent Features K in { 2,5,8 }
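To make the size of these grids concrete, the sketch below expands the Factorized Multilayer Perceptron grid into individual configurations and derives the plain Multilayer Perceptron grid by dropping the latent-feature dimension. The dictionary keys and the `expand` helper are hypothetical names chosen for illustration.

```python
from itertools import product

# Values copied from the list above; the key names are illustrative.
fmlp_grid = {
    "learn_rate": [0.1, 0.01, 0.001],
    "momentum": [0.1, 0.01, 0.001],
    "num_layers": [2, 5],
    "nodes_per_layer": [2, 5],
    "latent_features": [2, 5, 8],
}

def expand(grid):
    """Yield one dict per hyperparameter configuration in the grid."""
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        yield dict(zip(names, values))

fmlp_configs = list(expand(fmlp_grid))

# The Multilayer Perceptron grid is the same grid without latent features.
mlp_grid = {k: v for k, v in fmlp_grid.items() if k != "latent_features"}
mlp_configs = list(expand(mlp_grid))
```

This yields 3 × 3 × 2 × 2 × 3 = 108 configurations for the Factorized Multilayer Perceptron and 3 × 3 × 2 × 2 = 36 for the Multilayer Perceptron.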

Sequential Model Based Optimization

SMAC++

- α in { 0.0001, 0.0005, 0.001, 0.005, 0.01, 0.05, 0.1 }

- Number of Trees T in { 1, 2, 5, 10, 20, 50, 100, 200, 500, 1000 }

SCoT

- Tradeoff Parameter C in 1x for x in { -4, ..., 0 }

MKL-GP

- Number of Neighbors K in { 2 } (suggested by authors)

- α in { 0.3 } (suggested by authors)

Multilayer Perceptron (best hyperparameters overall of Experiment 1)

- Learn Rate η in { 0.01 }

- Momentum λ in { 0.01 }

- Number of Layers L in { 5 }

- Number of Nodes per Layer N in { 5 }

Factorized Multilayer Perceptron (best hyperparameters overall of Experiment 1)

- Learn Rate η in { 0.01 }

- Momentum λ in { 0.01 }

- Number of Layers L in { 2 }

- Number of Nodes per Layer N in { 5 }

- Number of Latent Features K in { 8 }
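The two fixed settings above are described as the best hyperparameters of Experiment 1, so each should be a member of the corresponding grid from the response-surface experiment. The following sketch checks that membership; the key names and the `in_grid` helper are hypothetical and only serve as an illustration.

```python
# Grids from the response-surface experiment (key names are illustrative).
mlp_grid = {
    "learn_rate": [0.1, 0.01, 0.001],
    "momentum": [0.1, 0.01, 0.001],
    "num_layers": [2, 5],
    "nodes_per_layer": [2, 5],
}
fmlp_grid = dict(mlp_grid, latent_features=[2, 5, 8])

# Fixed settings listed above for the optimization experiment.
mlp_best = {"learn_rate": 0.01, "momentum": 0.01,
            "num_layers": 5, "nodes_per_layer": 5}
fmlp_best = {"learn_rate": 0.01, "momentum": 0.01,
             "num_layers": 2, "nodes_per_layer": 5, "latent_features": 8}

def in_grid(config, grid):
    """True if config sets exactly the grid's hyperparameters to listed values."""
    return config.keys() == grid.keys() and all(config[k] in grid[k] for k in grid)

mlp_ok = in_grid(mlp_best, mlp_grid)
fmlp_ok = in_grid(fmlp_best, fmlp_grid)
```

Both checks pass for the values listed on this page.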